About me

This is Zhouyu Zhang’s (章舟宇) website. Everything is still under construction, myself included. After obtaining my Bachelor’s degree from Tsinghua University, Beijing, I am now pursuing my PhD in Robotics at the Georgia Institute of Technology. I am advised by Dr. Yue Chen, then Dr. Glen Chou and Dr. Frank (C.Y.) Chiu.

Research Interest

I work on optimization.

“People optimize.” — Jorge Nocedal, Numerical Optimization

Optimization provides a structural lens for solving complicated problems, both in the forward and inverse sense. Forward optimization is what you’d expect: decision-makers define objectives, accept some non-negotiable constraints, and produce strategies accordingly — for instance, shaping configuration spaces or generating control policies (hopefully not ones that make your robot explode). In the inverse sense, we observe the resulting behavior and reverse-engineer the likely goals and constraints — much like a diligent apprentice watching a master at work and trying to infer their intentions.

These two perspectives converge elegantly in robotics. My research leverages both: I use optimization not only to generate optimal behavior but also to recover the structural principles that govern it.

Robotic Applications

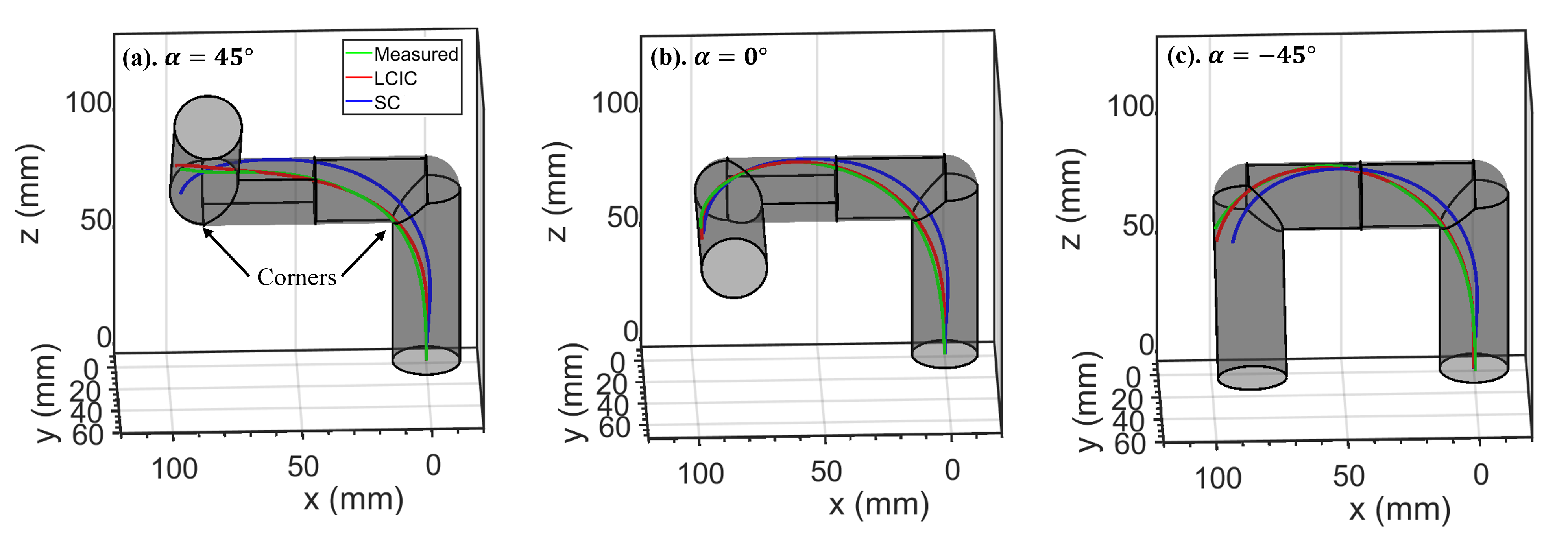

In the world of continuum robots, kinematics isn’t just hard — it’s soft, in all the worst ways. The actuation-to-task-space mapping is highly nonlinear, and the robots themselves are compliant, squishy entities that defy intuition. Solving their motion in free space often requires shooting methods, which are famously unstable and allergic to hard constraints. In this work, we proposed a more grounded approach: recasting kinematics as a constrained optimization problem with quadratic costs and nonlinear constraints. By exploiting local linearity in the actuation-to-task-space mapping, we generate reliable initial guesses and stable solutions.

Meanwhile, robots with simpler dynamics but more complex decision-making, like those in multi-agent systems, introduce a new challenge: inference. It’s not enough to compute optimal strategies — you must also deduce what others are optimizing for. Misjudging another robot’s goals or proximity tolerance might lead to a dangerous (or, depending on your mood, adorable) fender-bender. In this work, we formalized the inverse problem: learning the hard constraints respected by other agents, based on their locally optimal trajectories. Using tools from game theory and inverse optimal control, we showed that these constraints can, in fact, be exactly recovered.

Many questions remain unanswered — structurally, philosophically, and computationally. You can find a collection of such questions here. They keep me up at night.